Openmmlab Classification Format

In the realm of computer vision, the development of robust and efficient algorithms for image classification has always been a pivotal pursuit. With the emergence of frameworks like OpenMMLab, the landscape has witnessed a transformative shift, empowering developers and researchers with state-of-the-art tools and methodologies. In this article, we delve into the intricacies of classification format within OpenMMLab, exploring its architecture, capabilities, and best practices.

Table of Contents

ToggleUnderstanding OpenMMLab

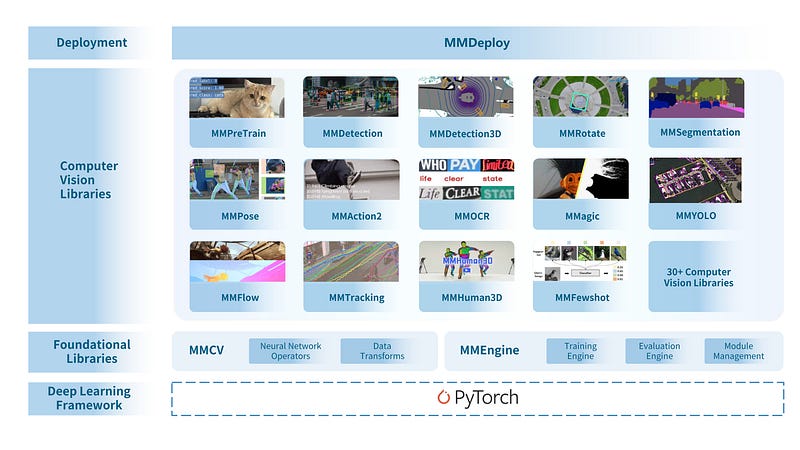

OpenMMLab stands as a pioneering open-source ecosystem tailored for multimedia research, encompassing a wide array of tasks including image and video understanding, 3D vision, and more. At its core, OpenMMLab advocates for modularity, extensibility, and usability, fostering an environment conducive to rapid experimentation and innovation.

Classification Format in OpenMMLab

Central to OpenMMLab’s classification module is its adherence to a standardized format that streamlines the training and evaluation process. At its essence, this format encompasses several key components.

Dataset Preparation

OpenMMLab supports various dataset formats, including but not limited to ImageNet, CIFAR, and custom datasets.

Data augmentation techniques such as random cropping, resizing, and flipping are readily available to enhance dataset diversity and model generalization.

Model Configuration

Users can seamlessly configure classification models from an extensive repository of pre-trained models, ranging from classic architectures like ResNet and VGG to cutting-edge designs like ResNeSt and Swin Transformer.

Customization options enable fine-tuning of hyperparameters, facilitating model adaptation to specific tasks and datasets.

Training Procedure

OpenMMLab simplifies the training process through a unified interface, allowing users to train models with minimal code overhead.

Support for distributed training across multiple GPUs or even clusters enhances scalability and accelerates convergence.

Evaluation Metrics

Evaluation metrics such as top-1 and top-5 accuracies provide comprehensive insights into model performance, enabling rigorous benchmarking and comparison.

Best Practices and Tips\ To maximize the efficacy of classification tasks within OpenMMLab, adhering to the following best practices is paramount:

Data Preprocessing: Ensure standardized preprocessing techniques such as mean subtraction and normalization to facilitate model convergence.

Model Selection

Experiment with diverse architectures and conduct thorough ablation studies to identify the most suitable model for the given task.

Hyperparameter Tuning

Fine-tune learning rates, batch sizes, and optimization algorithms to strike a balance between convergence speed and generalization performance.

Regularization Techniques: Incorporate regularization techniques like dropout and weight decay to mitigate overfitting and enhance model robustness.

Conclusion

Classification format within OpenMMLab represents a cornerstone in the pursuit of advancing computer vision research and applications. By embracing a standardized approach, harnessing state-of-the-art methodologies, and adhering to best practices, developers and researchers can unlock the full potential of classification tasks, paving the way for groundbreaking innovations in the field. As OpenMMLab continues to evolve, its classification capabilities are poised to redefine the boundaries of what is achievable in the realm of computer vision.